Bit-sized: Unit Testing Leadership: A Thought Experiment

Debugging the Management Layer: What If We Tested Leadership Like We Test Code?

Read Time: 6 minutes

Engineering teams verify code functionality through various types of tests, but leadership behavior often goes unverified beyond performance reviews and promotion cycles. This creates a double standard: rigorous validation for code, but largely faith-based validation for leadership. By treating leadership principles as function signatures with expected outputs and artifacts, we might identify and fix “leadership bugs” before they cascade into organizational problems.

In this article, we first highlight the verification gap in leadership practices, explore how unit-testing leadership behaviors could close it, discuss practical limitations, and suggest ways to implement and iterate on the approach.

The Verification Gap

I recently stumbled across a Substack note that got me thinking: “How do we actually test leadership?”

Many organizations define principles. At Kleinanzeigen, our SHAPE values serve as our guiding leadership principles. These values factor into our hiring decisions, regular feedback sessions, and performance reviews. They help us to evaluate leadership potential and performance against a consistent framework.

Solutions-oriented

Honest feedback

Act ahead with accountability

Prioritize impact

Empower our people

Across the industry, verification remains a challenge: these leadership evaluations, while valuable, typically rely on impressions and qualitative feedback rather than concrete, observable artifacts. They also tend to happen at scheduled intervals rather than in a continuous, week-by-week rhythm. That makes sense: giving written weekly feedback, even to just three people, quickly becomes an explosion of effort once everyone else is doing the same.

This creates a strange inconsistency: we trust our system design only after thorough verification, but we trust our leadership system largely on faith and good intentions. Yet the cost of a leadership failure is typically higher than that of a code failure.[1] It affects team morale, user experience, and strategic outcomes simultaneously, some directly and some indirectly.

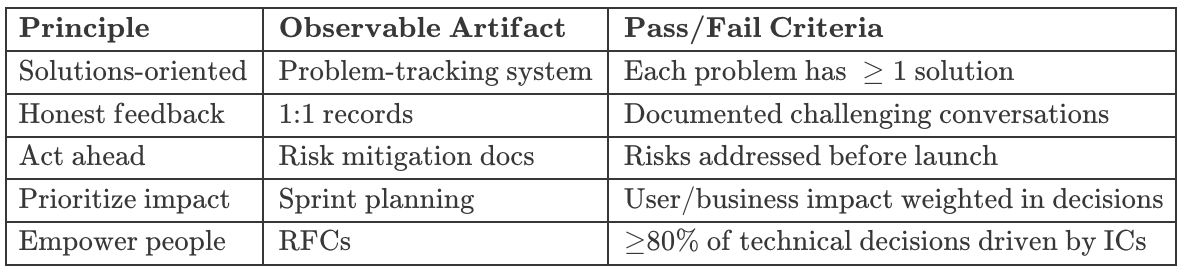

With clear principles like SHAPE already in place, we have a solid starting point for identifying observable artifacts that could make leadership behaviors as verifiable as our code.

From Theory to Observable Behavior

What would it look like to treat a leadership principle as a testable specification? Take our "Solutions-oriented" value:

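    // Placeholder types (assumed for illustration) so the signature type-checks.
    type Problem = string;
    type Solution = string;

    function solutionsOriented(problem: Problem): Solution[] {
      // Must return at least one solution per problem
      // Solutions must address root causes
      // Implementation must avoid blame narratives
      const solutions: Solution[] = [`Root-cause fix for: ${problem}`];
      return solutions;
    }

Instead of assuming compliance, we could verify it through specific artifacts: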

Writing down these examples reminds me that I drive too many RFCs myself, rather than delegating them down (or sideways). One nice side effect of mapping these leadership principles to smaller, observable artifacts is that you expose yourself to them more frequently. Values and principles mean only so much if you do not base your day-to-day actions on them. Building the habit of thinking about them more often is the hard part.

From Failed Tests to Behavioral Debugging

If we detected a pattern of such failures, we might approach them the same way we approach code bugs:

Debug: Identify which principle is being violated and why. In my RFC example, the principle was clear (Empower our people). The reason, wanting to ensure thoroughness and quality, wasn't about technical capability but about my own control tendencies.

Patch: Define the corrective behavior specifically. Not "Delegate more," but "For all RFCs, identify an IC owner who drives the process with my support, rather than me driving it directly."

Regression Test: Create a scenario to verify the fix. In this case, that means assigning the next RFC opportunity to a team member and providing support rather than taking the lead myself.
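The patch and regression test from the RFC example can be sketched as a small check over a list of RFCs. This is a minimal TypeScript sketch under stated assumptions, not an established tool: the `Rfc` shape, the `driverRole` labels, the `checkRfcDelegation` function, the 25% threshold, and the sample data are all hypothetical and exist only to illustrate the idea.

```typescript
// Hypothetical record of who drives an RFC; all field names are assumptions.
type Role = "manager" | "ic";

interface Rfc {
  title: string;
  driverRole: Role;
}

interface CheckResult {
  passed: boolean;
  managerDrivenShare: number;
  message: string;
}

// Checks the "Empower our people" patch: RFCs should be driven by an IC owner,
// with the manager in a supporting role. The default threshold is arbitrary.
function checkRfcDelegation(rfcs: Rfc[], maxManagerDrivenShare = 0.25): CheckResult {
  if (rfcs.length === 0) {
    return { passed: true, managerDrivenShare: 0, message: "No RFCs in this period." };
  }
  const managerDriven = rfcs.filter((rfc) => rfc.driverRole === "manager").length;
  const share = managerDriven / rfcs.length;
  const passed = share <= maxManagerDrivenShare;
  return {
    passed,
    managerDrivenShare: share,
    message: passed
      ? "Most RFCs are driven by ICs."
      : `${managerDriven} of ${rfcs.length} RFCs are manager-driven; find IC owners.`,
  };
}

// Made-up sample data: the period before the patch and the period after it.
const beforePatch: Rfc[] = [
  { title: "Example RFC A", driverRole: "manager" },
  { title: "Example RFC B", driverRole: "manager" },
  { title: "Example RFC C", driverRole: "ic" },
];
const afterPatch: Rfc[] = [
  { title: "Example RFC C", driverRole: "ic" },
  { title: "Example RFC D", driverRole: "ic" },
];

console.log(checkRfcDelegation(beforePatch).message);
console.log(checkRfcDelegation(afterPatch).message);
```

The check only counts who drives each RFC. It says nothing about the quality of the support given, so it would supplement a conversation with the team, not replace it.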

While treating leadership behaviors as testable specifications offers clear benefits, it’s important to recognize the potential pitfalls. Just as high code coverage doesn’t always indicate high-quality tests, unit-testing leadership behaviors comes with its own limitations. Let’s examine where this approach might face challenges:

The Practical Limitations

At this point, you might have raised your hand to say: “Wait a second, this will backfire!” Some likely objections:

• Measurement effects: Would leaders start optimizing for test-passing rather than actual leadership? (👋 Goodhart’s Law!)

• False binaries: Many leadership situations involve legitimate trade-offs between principles.

• Documentation burden: Do we really want to add more administrative overhead?

Goodhart’s Law observes that once a measure becomes a target, it ceases to be a good measure: people find ways to game it, so it no longer reflects the goal it was designed to track.

These are valid points. Our current people management systems exist for good reasons. Traditional leadership evaluations capture nuance that binary tests might miss. And crucially, humans aren’t code. Their motivation and buy-in matter in ways that have no equivalent in a program.

That said, I wonder if there’s a middle ground where we keep our existing leadership evaluation approaches while adding more verification, similar to how we might add automated tests to supplement or relieve manual QA.

Where This Fits in the Leadership Landscape

The tech industry struggles with measuring leadership effectiveness. Current approaches typically rely on three dominant paradigms:

Performance-based evaluations that measure outcomes (DORA metrics, business results) but not the specific behaviors that created them

Skills-based frameworks that assess competencies in structured scenarios but miss day-to-day leadership behaviors

Values-based assessments that depend on subjective interpretations of alignment with company principles

What’s notably missing is a systematic approach to connect stated leadership principles with observable behaviors and artifacts.[2] While organizations increasingly use metrics like employee retention and satisfaction to evaluate leadership impact, these are primarily lagging indicators that don’t provide real-time feedback on specific leadership behaviors.

Recent research suggests that translating leadership values into concrete, observable behaviors, and systematically measuring their outcomes, results in more effective leadership and improved team performance than relying solely on abstract principles or subjective impressions.[3]

The unit testing approach differs by creating direct links between principles and observable artifacts. Rather than waiting for annual reviews or quarterly engagement surveys to reveal leadership issues, it proposes a verification system that could catch and address problems before they affect team performance or morale.

This doesn’t aim to replace existing evaluation frameworks but to complement them, much as automated tests don’t replace code reviews but provide an additional layer of quality assurance that catches different types of issues.

Where This Might Lead

For now, this remains a thought experiment that I'm still exploring. I'm planning to start small by testing it on myself. I maintain an “achievement” log for each calendar week, writing down important outcomes, goals met, and progress. Over the next few months, I will categorize those entries by our SHAPE values, reference observable artifacts, and see whether this approach yields insights for me: do I have blind spots (like my RFC example), or am I excelling at a specific value?
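Categorizing a weekly log by value could look roughly like the sketch below. It is a minimal TypeScript sketch under stated assumptions: the five value names come from the SHAPE list above, while the `LogEntry` shape, the `tallyByValue` and `blindSpots` helpers, and the sample entries are hypothetical. "Blind spot" here simply means a value with no log entries in the period.

```typescript
// The five SHAPE values named in the article.
const SHAPE_VALUES = [
  "Solutions-oriented",
  "Honest feedback",
  "Act ahead with accountability",
  "Prioritize impact",
  "Empower our people",
] as const;
type ShapeValue = (typeof SHAPE_VALUES)[number];

// Hypothetical shape of one achievement-log entry.
interface LogEntry {
  week: string; // free-form week label, e.g. "W01"
  summary: string;
  value: ShapeValue;
  artifact?: string; // optional pointer to an observable artifact
}

// Counts how many entries were tagged with each value.
function tallyByValue(entries: LogEntry[]): Record<ShapeValue, number> {
  const counts = {} as Record<ShapeValue, number>;
  for (const value of SHAPE_VALUES) counts[value] = 0;
  for (const entry of entries) counts[entry.value] += 1;
  return counts;
}

// Values with zero entries in the period are candidate blind spots.
function blindSpots(entries: LogEntry[]): ShapeValue[] {
  const counts = tallyByValue(entries);
  return SHAPE_VALUES.filter((value) => counts[value] === 0);
}

// Made-up sample entries for the sketch.
const sampleLog: LogEntry[] = [
  { week: "W01", summary: "Proposed fix for flaky deploys", value: "Solutions-oriented", artifact: "RFC" },
  { week: "W01", summary: "Cut low-value roadmap item", value: "Prioritize impact" },
  { week: "W02", summary: "Root-caused an outage", value: "Solutions-oriented", artifact: "postmortem" },
];

console.log(tallyByValue(sampleLog));
console.log(blindSpots(sampleLog));
```

A zero count is only a prompt to look closer. It may reflect what was written down that week, not what actually happened.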

I'm particularly interested in whether “leadership bugs” follow the same patterns as code bugs—do they cluster in specific areas? Are there “edge cases” that commonly trigger failures? And are there equivalent “design patterns” that make certain principles easier to uphold?

While I’ve framed this from a management perspective, these principles apply equally to ICs. If you're aiming to grow your impact or advance toward leadership roles, consider tracking a specific leadership value in your weekly activities. Fostering these habits early not only strengthens your leadership potential but prepares you effectively for future roles.

Perhaps there’s a way to bring more engineering discipline to leadership without losing the human element that makes leadership work in the first place.

If you’ve tried something similar or have thoughts on potential pitfalls I’ve missed, I’d be curious to hear about your experiences. Leave a comment or get in touch with me!

Thanks,

Tim

1. Kaiser, R. B., Hogan, R., & Craig, S. B. (2008). Leadership and the Fate of Organizations. American Psychologist.

2. McKinsey & Company. (2022). What’s missing in leadership development?

3. Nöthel, S., Nübold, A., Uitdewilligen, S., Schepers, J., & Hülsheger, U. (2023). Development and validation of the adaptive leadership behavior scale (ALBS). Frontiers in Psychology.

Fascinating concept, Tim, especially because you’re embedding yourself in the experiment! Alongside regression testing, it might be valuable to stress test leadership: observing how leaders respond under high workloads or constrained resources. With the increasing complexity of workplaces, edge and corner cases are becoming more relevant, not just in software but in human performance as well.

I’d also bring “unbossing” into the conversation, as more companies flatten hierarchies and phase out middle management. That shift makes it even more important to proactively support emerging leaders and identify those struggling early in the cycle. Unfortunately, skip-level reviews (when they happen at all) too often reflect org politics more than actual performance.

My question for you: Could AI tools help simulate or support your testing approach? I haven't used these tools myself, but am curious about tools like BetterUp, Leena AI, Lattice, and Eightfold AI.

I look forward to reading more about your experiment :-D